In my last post, I suggested a list of principles for neural motor control, specifically, that it should be perception focused, causally organized, spatially oriented, planned around waypoints, varying in complexity, event-timed, and spatially dichotomous. In this post, I will propose a set of components to implement these principles.

Biology of the Motor Cortex

But first, a bit of biology.

A block diagram of the cerebral motor system is shown in Figure 1. It is augmented from a similar diagram in Kantak et al. (2011) and expanded below the motor cortex based on information from Bosch-Bouju et al. (2013).

The motor cortex, comprising the areas in dark blue in Figure 1, lies on a strip across the center of the brain, just in front of a prominent fold called the central sulcus, with the somatosensory cortex just behind it. As an overall system, the motor cortex receives sensory input including direct bodily sensation (from the somatosensory cortex), high-level egocentric perception (from the posterior parietal cortex), and high-level cognition (from the dorsolateral prefrontal cortex). Not shown in the diagram are the frontal eye fields, slightly in front of the premotor cortex and performing eye movement planning. The outputs of the motor cortex can be divided into face and mouth controls, which pass directly to the brainstem and thence to the orofacial muscles, and the remaining controls, which pass through the motor region of the thalamus, where they are integrated with inputs from the cerebellum and the basal ganglia before being passed through the brainstem and into the spine. The goals towards which the motor cortex organizes action may emerge from the mid-cingulate cortex, also known as the dorsal anterior cingulate cortex.

The motor cortex breaks out into three distinct parts. The lowest level of control in the cortex is the primary motor cortex at the rear of the motor cortex. The primary motor cortex can control individual muscles, but mostly it operates at the level of muscle groups, such as an arm, a hand, or a leg. The premotor cortex at the front and sides of the motor cortex computes coordinated plans and is capable of conditional action. It also contains mirror neurons that fire when an action is performed either by the self or another. The supplementary motor area at the front along the midline of the neocortex is responsible for coordinating the timing and execution of complex routines. The main distinction between the premotor and supplementary areas seems to be that the premotor cortex is responsible for external coordination based on spatial perception, whereas the supplementary motor area handles internal coordination.

Each of the three components exists in both left and right hemispheres of the brain, with the left hemisphere controlling the right side of the body and the right hemisphere controlling the left side. For the most part, both left and right hemispheres behave similarly, but there are curious differences. For example, as shown in Figure 3.13 of this textbook, a complex movement of the left hand activates both left and right supplementary motor areas, but when the movement is only imagined rather than performed, only the left supplementary motor area activates even though the left hand is controlled from the right hemisphere. This strange fact may support my primary thesis that language is understood through simulation, given that language is generally processed in the left hemisphere.

The primary motor cortex is somatotopically arranged, which is to say that you can lay out the human body along this strip of cortex in such a way that the parts of the body are controlled by the corresponding part of the primary motor cortex. This correspondence is often referred to as the motor homunculus. The premotor cortex and the supplementary motor cortex are likewise somatotopic in that complex plans for body parts are generated in particular regions of the cortex; however, whole-body coordination necessarily elides these distinctions at the highest levels of motor control.

Examining the connections more closely, we can observe significant relevant substructure. Firstly, the primary motor cortex receives direct input from somatosensory areas, which enables the use of touch feedback at a low level during motor control. This responsiveness is critical for performing tasks where careful contact is involved, as in picking a grape without crushing it.

Secondly, only the premotor cortex has access to high-level perception, which it receives from two sources: (1) the posterior parietal lobe, where sensory information is aggregated from an egocentric perspective, and (2) the dorsolateral prefrontal cortex, which performs high-level cognition. It is worthy of note that in humans but not macaques there are connections from the temporal association areas to the posterior parietal lobe related to tool use (Orban & Caruana, 2014), which may indicate cross-communication between the what and where pathways to support motor control with tools through special spatial modeling of tool behavior.

Thirdly, the supplementary motor area receives strong input from the mid-cingulate cortex, which integrates emotions and senses to perform decision-making. This motor area is most active during proactive rather than reactive motion, agreeing with the idea that it is responsible for internal generation of action, whereas the premotor cortex is primarily involved in responses to external stimuli. The supplementary motor area does not receive substantial direct input from the perceptual system.

Fourthly, all three motor areas connect to motor output at various levels, including through the basal ganglia, the brainstem, the cerebellum, and even the spine. The actual motor signal to the muscles is an aggregate of the signal from all these neural components. No part of the motor cortex controls muscles directly. Instead, the motor cortex issues commands that indicate the direction and force of movement, and the exact muscle contractions are determined by alpha-motor neurons in the spine (or in the brainstem for orofacial muscles). Furthermore, the three components of the motor cortex are not equally connected. In the case of reach behaviors, the premotor cortex does connect to the spine, but its connections terminate around the trunk and shoulders. Their effect is to modulate whole-body movement while the primary motor cortex handles the core reaching activity. Finally, many movements are not generated by the motor cortex but by the brainstem and cerebellum. For example, cats with lesions in their motor cortex can still walk, but can no longer avoid obstacles.

The motor thalamus sits in the middle of the motor pathway and appears to have an integrative role, consolidating motor plans for action. It has a substructure that breaks down into a gradient of regions from those more connected with planning to those more involved in action execution. The planning regions preferentially connect with the premotor cortex and supplementary motor areas, and even with the prefrontal cortex. The execution regions receive more input from the primary motor cortex and the cerebellum.

One theory of the motor thalamus is that the motor thalamus operates on a longer time delay (300 ms, measured through reaction times) than the basic brain frequencies (< 100 ms) so as to consolidate a plan before releasing it to be executed (Bosch-Bouju et al., 2013). In such a framework, the role of reward computations might be to select the most rewarding plan from among multiple competing action plans at the final step before execution.

As a final observation, the role of the basal ganglia in motion planning is curious, given that the basal ganglia are typically involved in propagating rewards, as through dopamine signaling. This involvement has led some to suggest that the thalamus is involved directly in motor learning and adaptation. Two parts of the basal ganglia are involved in motor control, the substantia nigra pars reticulata (SNpr) and the globus pallidus internus (GPi). Both connect into the planning-oriented parts of the motor thalamus. The GPi even connects up to the motor cortex as well. The GPi is regulated by dopamine; when substantial dopamine is present, it will inhibit the GPi, which then disinhibits the motor thalamus, leading to a higher likelihood of movement. Another way to interpret the involvement of the basal ganglia is as a general-purpose mechanism for regulating the propensity to act. Without inhibition from GPi, the motor circuits can become too excited and can result in erratic or jerky movements, as in Parkinson’s disease. Tangentially, the GPi circuits when overactive are also potentially involved in a preference for procrastination, and there may even be a so-called slacker gene. But it seems to me that the simplest way to understand the involvement of the basal ganglia in motion is as twofold: (1) smoothing out actions as they execute, and (2) conditioning the decision on whether and how to move on a global mood that reflects energy levels and risk tolerance.

The biology of motor control is a complex topic, but the summary above is sufficient to begin unpacking a generalized pattern for motor control.

Hierarchical Motor Control

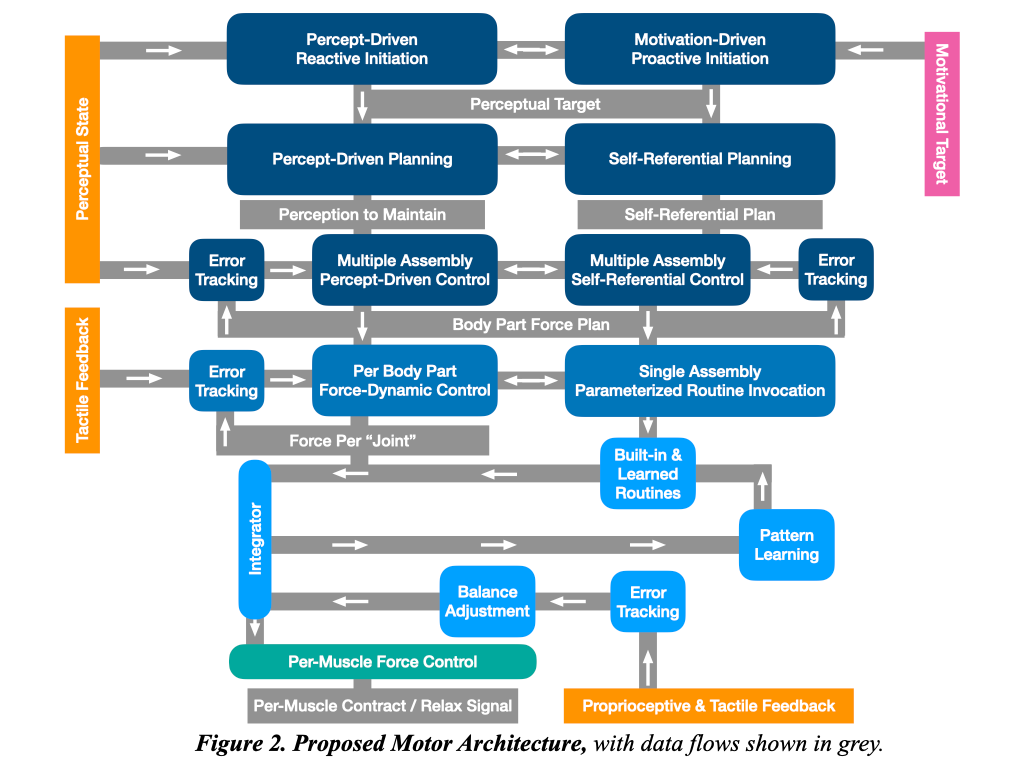

As the discussion above suggests, the motor system is in fact a hierarchy of controllers that operate under different regimes in terms of scope (single muscle to whole body), temporality (short- to long-term), and degree of sensory feedback (low to high). Figure 2 shows an architecture that illustrates one possible interpretation of this hierarchy. The components range from long-term, global planning at the top of the diagram to short-term, immediate implementation at the bottom. The colors are chosen to correspond with the blocks from Figure 1. In this section I will explain the role and function of each component, starting from the bottom of the diagram and working upwards.

To reiterate my standard caveat for these architecture posts, this architecture is intended to reflect a mammal-like motor system, and does not represent a substantive claim about the actual motor system of any animal in particular.

Low-Level Per-Joint Force Control

The output of this architecture is a force-based control signal at a per-joint level. The word joint requires clarification. When roboticists talk about controlling a robot, they break it down into individual degrees of freedom that are minimal in the sense that each degree of freedom has a state that can be represented by a single scalar value. Each joint might have multiple degrees of freedom; so an elbow has one, a shoulder has two, the waist has three (twist, bend forward, bend to the side), and so on. A force control for a joint indicates the amount of force to apply in each one of the available degrees of motion.

But in a mammal, not all degrees of freedom are associated with joints, nor are all mappings simple. How many degrees of freedom are there in a tongue? Two, six, or eight? How many degrees of freedom are there in facial expressions? Rather than solve these puzzles, I simply use the term joint to refer to any colocated set of degrees of freedom that are under unified conscious control. I mainly mean obvious things like a wrist, an elbow, a shoulder, each finger individually, a knee, an ankle, a waist, and a neck. But I also mean things that are less obvious like a tongue, a face, and a tail. The specification of these things is part of the layout of the organism, and I take it for granted that such a layout exists.

The job of the lowest-level controller for a joint in the motor architecture is to translate a force-based signal to answer the question of how that joint should move, as the spine does for mammals. Most typically, we would interpret that answer as an angular force, but in terms of neural signals, it would simply be a magnitude encoded by the total rate of firing across an assemblage of neurons. These force-bearing magnitudes are converted by the lowest-level controller into signals for the contraction or relaxation of individual muscles.
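As a rough illustration of this lowest-level translation, here is a minimal sketch in Python. It assumes, purely for illustration, that each degree of freedom is driven by a single opposing pair of muscles and that a signed scalar stands in for the firing-rate magnitude; the names `MusclePair`, `force_to_muscles`, and `joint_to_muscles` are hypothetical and not part of the architecture itself.

```python
from dataclasses import dataclass


@dataclass
class MusclePair:
    """Hypothetical antagonist pair acting on one degree of freedom."""
    flexor: float = 0.0    # activation in [0, 1]
    extensor: float = 0.0  # activation in [0, 1]


def force_to_muscles(force: float, max_force: float = 1.0) -> MusclePair:
    """Map a signed force command onto one opposing muscle pair.

    Positive force contracts the flexor and relaxes the extensor;
    negative force does the opposite. The magnitude is clipped to 1.
    """
    level = min(abs(force) / max_force, 1.0)
    if force >= 0:
        return MusclePair(flexor=level, extensor=0.0)
    return MusclePair(flexor=0.0, extensor=level)


def joint_to_muscles(forces: list[float]) -> list[MusclePair]:
    """A joint is a list of degrees of freedom, each with its own force."""
    return [force_to_muscles(f) for f in forces]
```

Under these assumptions a waist would be called with three forces (twist, bend forward, bend to the side) and an elbow with one. Real muscles co-contract and span multiple joints, which this sketch ignores.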

Fast-Feedback Controller for Balance Adjustment

The role of the cerebellum has always been a bit uncertain, given that people lacking this motor organ can still move around, albeit with less fine control. The reason for this is that the cerebellum sits to the side of the motor pathway, monitoring outgoing motor commands and adjusting them. I propose two major roles for the cerebellum that are both in line with the research literature.

The first is that the cerebellum provides a fast feedback loop for correcting motor controls. Human bodies are always changing at various timescales, including short timescales, for example, as when glycogen in the muscles depletes, or when muscle strength increases due to exercise. These changes mean that the response of muscles to neural activity will change as well. If there were no error correction, then this instability would result in erratic control.

Each joint of the body is equipped with neurons that measure the state of the joint. These neurons are pressure-sensitive; by examining the amount of pressure around a joint, one can measure the position of muscles. If the output of the brain into the spine is a force-based control, then the efficacy of these controls can be checked by comparing the state of the joint after a small time delay with the command that was previously sent. There is evidence that these time delays are learned and continuously calibrated in the cerebellum.

The cerebellum, then, is in a position to compare the brain output with its motor effect. It can then issue an adjustment to motor controls so that they will achieve the desired effect. Because of the time delay, this adjustment is not as simple as it sounds; it is not enough to simply correct the differences measured in the past. Instead, the cerebellum must learn a model of the motor system as it is currently operating and then prospectively compensate for errors in outgoing signals.
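To make the idea of prospective compensation concrete, here is a toy sketch in Python. It assumes a deliberately simple body in which the measured effect is the command multiplied by an unknown gain (standing in for muscle responsiveness), and in which feedback arrives a fixed number of steps late. The class `CerebellarCorrector`, the function `simulate`, and all parameter values are hypothetical illustrations, not a claim about how the cerebellum computes.

```python
from collections import deque


class CerebellarCorrector:
    """Learns a one-parameter model of the motor system from delayed feedback."""

    def __init__(self, learning_rate: float = 0.2):
        self.gain_estimate = 1.0      # current model of muscle responsiveness
        self.learning_rate = learning_rate
        self.sent_commands = deque()  # commands still awaiting feedback

    def adjust(self, desired_force: float) -> float:
        """Scale the outgoing command so the predicted effect matches the intent."""
        command = desired_force / self.gain_estimate
        self.sent_commands.append(command)
        return command

    def observe(self, measured_effect: float) -> None:
        """Pair delayed feedback with the command that caused it and update the model."""
        command = self.sent_commands.popleft()
        if command == 0.0:
            return  # a zero command carries no information about the gain
        implied_gain = measured_effect / command
        self.gain_estimate += self.learning_rate * (implied_gain - self.gain_estimate)


def simulate(true_gain: float = 0.6, steps: int = 200, delay_steps: int = 3) -> float:
    """Run the corrector against a body whose feedback lags by delay_steps."""
    corrector = CerebellarCorrector()
    delayed_effects = deque()
    for _ in range(steps):
        command = corrector.adjust(1.0)
        delayed_effects.append(true_gain * command)
        if len(delayed_effects) > delay_steps:
            corrector.observe(delayed_effects.popleft())
    return corrector.gain_estimate
```

The point of the sketch is the pairing step: the corrector does not patch up past errors directly, but uses each delayed measurement to refine a model that is then applied to commands not yet sent. Here the learned delay is represented implicitly by the two queues staying in step.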

The effect of this error correction is that higher brain controllers in the brainstem and cortex can learn stable models of motor control that are invariant with respect to the hour-to-hour state of the body. Over time, these higher controllers could also adapt so that the need for error correction from the cerebellum is minimized.

This error correction machinery is shown in Figure 2 under the labels of Error Tracking and Balance Adjustment. They feed into an integrator that represents the role of the motor thalamus. This integrator combines the balance adjustment and error correction into the outgoing force-based per-joint control signal from higher systems so that the overall control of the system is stabilized and improved.

Pattern Learning through a Cerebellar Repeater

The second likely task of the cerebellum involves the learning or caching of motor routines. This proposal is more speculative. That said, it is known that the cerebellum is involved in repeated activity. Since the cerebellum observes outgoing motor commands, it could additionally learn to predict these commands. Predictable sequences could then be reinforced. After enough reinforcement, the higher controllers would only need to send an initial sequence, which the cerebellum would then continue autonomously.

This characterization provides a mechanism for the learning of low-level motor routines as a kind of “language model” predicting motor control step by step. A more sophisticated model could then issue complex adjustments during the recapitulation of a predicted sequence in order to effectively parameterize these learned routines. The routine would stop when the next step is no longer predictable. Overall, it would behave like an extended autocomplete for motor commands.

Figure 2 shows two elements: a Pattern Learning element that observes outgoing motor commands and learns to predict them, and an element for Built-in & Learned Routines that can replicate these learned patterns when appropriately stimulated. The output of the second element would be a force-based per-joint control sent to the Integrator for inclusion in the outgoing signal.

This same pattern of a predictive cache could also be effective for higher-level controllers.
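The autocomplete behavior can be sketched with a small n-gram predictor. The sketch below is in Python and assumes, for simplicity, that motor commands are discrete symbols rather than continuous force signals; the names `PatternLearner` and `continue_routine` and the thresholds `min_count` and `min_confidence` are hypothetical choices made for illustration.

```python
from collections import Counter, defaultdict


class PatternLearner:
    """Observes command sequences and learns which command follows a short context."""

    def __init__(self, context: int = 2, min_count: int = 3, min_confidence: float = 0.9):
        self.context = context
        self.min_count = min_count            # repetitions needed before a pattern is trusted
        self.min_confidence = min_confidence  # share of observations the top follower must hold
        self.counts = defaultdict(Counter)

    def observe(self, commands: list) -> None:
        """Count every (context -> next command) pair in one observed sequence."""
        for i in range(len(commands) - self.context):
            key = tuple(commands[i:i + self.context])
            self.counts[key][commands[i + self.context]] += 1

    def predict(self, recent: list):
        """Return the next command if it is predictable, otherwise None."""
        followers = self.counts.get(tuple(recent[-self.context:]))
        if not followers:
            return None
        command, count = followers.most_common(1)[0]
        total = sum(followers.values())
        if count < self.min_count or count / total < self.min_confidence:
            return None
        return command


def continue_routine(learner: PatternLearner, prefix: list, max_steps: int = 20) -> list:
    """Extend an initial sequence until the next step is no longer predictable."""
    sequence = list(prefix)
    for _ in range(max_steps):
        next_command = learner.predict(sequence)
        if next_command is None:
            break
        sequence.append(next_command)
    return sequence
```

After the learner has observed the sequence `["a", "b", "c", "d"]` three times, calling `continue_routine(learner, ["a", "b"])` extends the prefix to the full four-step routine and then stops, since nothing reliably follows `("c", "d")`. A cyclic routine such as walking would instead run until `max_steps` or until a higher controller intervenes.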

Abstracting to Body Part Control

I have asserted that the primary motor cortex implements movements at the scale of either individual muscles or body part assemblages. These movements either take place over a short period of time or are cyclic (like walking).

Neurons in the primary motor cortex can flex or relax individual muscles or opposing pairs, but this is not the most common form of control. More commonly, the primary motor cortex initiates or sustains a movement at the level of body parts, as in reaching, walking, or looking. These types of movements are demonstrated graphically in Figures 3.7, 3.8, and 3.9 of this online neuroscience textbook.

The concept of a body part is part of the design of the organism; it is simply a level of hierarchy with little intrinsic meaning. That is, a body part is a collection of joints that are unified at this particular level of control, and their unification at this level of control is what makes them a body part.

In the architecture of Figure 2, the role of the primary motor cortex is represented as a body-part controller that transforms higher-level commands specified in terms of body parts into per-joint force controls. At this stage, I presume there is no communication across body parts; each body part is managed by a separate controller. To aid in its task, the controller has access to direct sensory input in the form of touch and proprioception only from the body part in question.

As with the proposed error correction in the cerebellum, the body-part controller could likewise use tactile and proprioceptive feedback to measure the outcomes of its commands and correct itself over time. In addition, sensory feedback could be used to immediately respond to unforeseen problems by aborting motor controls when trouble is encountered.

Consider what the limitation to tactile feedback means: the body-part controller cannot implement visual or auditory feedback, as it does not have access to this information. Hand-eye coordination, as one example, must be handled at a higher level.

On the other hand, the body-part controller is perfectly capable of executing a motion independent of vision or hearing such as, say, crossing one’s fingers behind one’s back. Such actions might be thought of as a path through space over time; conceptually we might imagine specifying a position p(t) or a velocity v(t) or even an acceleration a(t), where t indicates time from 0 at start to 1 at finish. Such paths could further be parameterized through linear transformations of time p(kt + b), where k indicates dilation or contraction of time and b indicates phase offset.
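The time parameterization can be illustrated with a short Python sketch. The particular path `reach_path` (a smooth one-dimensional move from 0 to 1) is an arbitrary example chosen for illustration, and `reparameterize` and `velocity` are hypothetical helper names; only the forms p(t), v(t), and p(kt + b) come from the discussion above.

```python
import math


def reach_path(t: float) -> float:
    """Example position path p(t): a smooth move from 0 to 1 as t goes from 0 to 1."""
    t = min(max(t, 0.0), 1.0)  # hold the endpoints outside the movement window
    return 0.5 - 0.5 * math.cos(math.pi * t)


def reparameterize(path, k: float = 1.0, b: float = 0.0):
    """Return the path t -> p(k*t + b): k rescales time, b shifts the phase."""
    return lambda t: path(k * t + b)


def velocity(path, t: float, dt: float = 1e-4) -> float:
    """Numerical estimate of v(t) as the central difference of p(t)."""
    return (path(t + dt) - path(t - dt)) / (2 * dt)
```

With `slow = reparameterize(reach_path, k=0.5)`, the same movement takes twice as long, so `slow(1.0)` is only at the midpoint of the reach. With `late = reparameterize(reach_path, b=0.5)`, the movement starts halfway through. The same stored path thus yields a family of movements without relearning.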

From the point of view of the body part controller, there is no difference between following these self-referential paths versus following a path tuned to visual feedback, hence both types of inputs are shown with one label as “Body Part Force Plan” in Figure 2.

Importantly, there is no reason the body part controller cannot receive a command to simply move one joint within the body part. I am not saying that the individual joints are invisible to higher controllers, but rather that the preferred mode of control pertains to assemblages of joints.

Another role for the body part controller might be to initiate or maintain a learned routine. The output in this case would not be a per-joint force control but rather a signal to cause the initiation of a routine. This is shown in Figure 2 as Routine Invocation, but if the interpretation of a routine as a continuation of an initial signal is correct, then there may not be a significant difference between force-based joint control and routine invocation, which would simply repeat or extend a fixed sequence of initial force controls.

Coordinating Across Body Parts

Whereas the body part controllers would transform body part instructions into per-joint commands, the next set of controllers up would transform spatial and self-referential plans into body part instructions. These two aspects — spatiality and self-reference — represent the distinction between the premotor cortex for spatiality and the supplementary motor area for self-reference.

I have not written about self-reference thus far, mainly because self-reference could be modeled as a particular kind of external reference. However it is a fact that the supplementary motor area is separate from the premotor cortex in terms of function and connectivity, and its function involves sequenced movement of the self in unobstructed space. Therefore this area must use some model of the self that is disconnected from either egocentric or allocentric spatial models. I will not explore the nature of this model now but will return to the topic in time.

In any case, the goal at this level of control is to transform plans into controls at the level of body parts. Let us call this the plan execution layer with two separate modules, one for spatial plans, shown on the left side of Figure 2, and one for self-referential plans, shown on the right of Figure 2. Each of these plans coordinates one or more body parts, hence the term Multi-Assembly.

On the spatial side, a plan would consist of a list of concurrent spatial relationships to be maintained, with at most one such relationship per body part. This implements the architectural principle of spatial orientation. The spatial plan executor transforms this list into a set of instructions, one per body part, that will maintain the relationship. To perform the task, the executor has access to the output of the perceptual where pathway as well as the cognitive pathway. This dual source of perceptual data implements the principle of spatial dichotomy in motor control. As with the body part and joint controllers, I assume there is an error tracker that compares the outcome of those instructions in perceptual space with the goal, which can be used to correct future instructions.
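One way to picture a spatial plan and its executor is the following Python sketch. It assumes a drastically simplified one-dimensional perceptual space and only two kinds of relationship ("toward" and "away"); the names `SpatialRelation`, `build_spatial_plan`, and `execute_step` are hypothetical, as is the representation of percepts as a dictionary of positions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpatialRelation:
    """One relationship to maintain: a body part relative to a perceived target."""
    body_part: str
    relation: str  # "toward" or "away" in this toy example
    target: str


def build_spatial_plan(relations: list) -> dict:
    """Index relationships by body part, enforcing at most one per body part."""
    plan = {}
    for rel in relations:
        if rel.body_part in plan:
            raise ValueError(f"{rel.body_part} already has a spatial relationship")
        plan[rel.body_part] = rel
    return plan


def execute_step(plan: dict, perceived: dict) -> dict:
    """Turn the plan into one instruction per body part.

    `perceived` maps names of body parts and objects to 1-D positions.
    Each instruction is a signed displacement for the body part controller.
    """
    instructions = {}
    for part, rel in plan.items():
        offset = perceived[rel.target] - perceived[part]
        instructions[part] = offset if rel.relation == "toward" else -offset
    return instructions
```

Calling `execute_step` repeatedly as perception updates gives a crude version of maintaining a relationship over time. An error tracker could compare the offset after each step with the offset the executor expected, but that is left out here.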

On the self-referential side, there would be a self-referential plan of some kind that would be similarly converted to a force plan for individual body parts. To perform this task, the spatial and self-referential plan executors should be able to communicate and cooperate. The self-referential executor should also be able to compare its progress with the plan for error correction.

Motion Planning for the Whole Body

The purpose of planning is to convert a goal into a series of steps that will achieve the goal. These steps can then be parceled out to the plan execution layer one at a time. In line with the distinction between the spatial and self-referential movement mentioned above, I assume two separate planners in Figure 2, one of them spatial and the other self-referential. In either case, I assume the two pieces work closely together in order to generate a coherent plan in two parts, one spatial and one self-referential.

Movement plans include timing and coordination effort. Internal timing is handled by the self-referential planner, and external timing by the spatial planner. In any case, as discussed in the last post, the timing of movement is synchronized with respect to events, external or internal.

A step in a plan would be a collection of spatial or self-referential propositions emanating from the perceptual system that are to be maintained simultaneously. The overall plan, then, would have a graph-like structure in which each node refers to a binary (or unary) spatial relationship with a subject and object that is to be maintained or monitored. Generally, each node would refer to exactly one body part or joint, but there may be special cases. Consider clapping, for instance, in which both hands must be brought together with force. This could be viewed as a self-referential plan, or it could be viewed as a pair of spatial relationships in which one hand moves towards the other. For the left brain, which controls the right hand, the right hand is the figure and the left hand is the ground. For the right brain, the left hand is the figure and the right hand is the ground.

A node in a plan graph could pertain to an external percept that is not under control. For example, the plan to clap your hands when the bell rings would have the following steps:

1. Bring hands to face each other, horizontal to the ground.

2. Someone rings a bell.

3. Bring hands together with force.

The external, uncontrolled event of the bell ringing is thus part of the plan, even though it cannot be controlled. Such events represent conditional action, which is handled in the premotor cortex. Such events could also represent the action of a compatriot, detected by mirror neurons. Such interactions would enable cooperation in a shared plan among multiple individuals.
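The clap-on-bell plan can be written down as a short event-timed sequence. The Python sketch below flattens the plan graph into a list for simplicity and treats perception as a stream of event labels; `Step`, `CLAP_ON_BELL`, and `run_plan` are hypothetical names used only for this illustration.

```python
from dataclasses import dataclass


@dataclass
class Step:
    description: str
    controlled: bool  # True: the organism acts; False: wait for an external event


CLAP_ON_BELL = [
    Step("hands face each other", controlled=True),
    Step("bell rings", controlled=False),
    Step("bring hands together", controlled=True),
]


def run_plan(steps: list, events: list) -> list:
    """Advance through the plan, blocking on uncontrolled steps.

    Returns the controlled steps that were actually performed. If an
    awaited event never shows up in `events`, the plan stalls there.
    """
    performed = []
    pending = iter(events)
    for step in steps:
        if step.controlled:
            performed.append(step.description)
        elif not any(event == step.description for event in pending):
            break  # the awaited event was never perceived
    return performed
```

If the event stream is `["door opens", "bell rings"]`, both controlled steps are performed; if the bell never rings, only the first is. The same structure would cover a shared plan, with the uncontrolled step standing for a compatriot's action rather than a bell.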

Planning itself can be performed with respect to a type graph in which actions connect object space. I have been suggesting this point of view in prior posts on spatial relationships and motor control. That is, the plan begins with a goal specified in perceptual terms. The organism observes how perceptions change over time and learns to predict which states precede and follow others. Then, when presented with a current state and a desired state, the planner can work forwards and backwards using an algorithm like A* search from classic AI in order to construct a sequence of state transitions that will lead from the current state to the goal. Each transition is associated with one or more actions, and paths so discovered can be assessed for level of effort and coordinated with the motivational system to identify those with minimal effort and highest success probability.
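For readers unfamiliar with A*, here is a minimal forward-only sketch in Python over a small table of learned state transitions. The state names, actions, and effort values are invented for illustration, and `a_star` is a generic textbook implementation rather than a proposal for how neurons perform the search. With the default zero heuristic it reduces to uniform-cost (Dijkstra) search.

```python
import heapq


def a_star(transitions: dict, start: str, goal: str, heuristic=lambda state: 0.0):
    """Find the least-effort action sequence from start to goal.

    `transitions` maps a state to a list of (action, next_state, effort).
    `heuristic` estimates remaining effort and must never overestimate.
    Returns (actions, total_effort), or None if the goal is unreachable.
    """
    frontier = [(heuristic(start), 0.0, start, [])]
    best_cost = {start: 0.0}
    while frontier:
        _, cost, state, actions = heapq.heappop(frontier)
        if state == goal:
            return actions, cost
        if cost > best_cost.get(state, float("inf")):
            continue  # a cheaper route to this state was already expanded
        for action, next_state, effort in transitions.get(state, []):
            new_cost = cost + effort
            if new_cost < best_cost.get(next_state, float("inf")):
                best_cost[next_state] = new_cost
                priority = new_cost + heuristic(next_state)
                heapq.heappush(frontier, (priority, new_cost, next_state, actions + [action]))
    return None


# Hypothetical learned transitions between perceptual states.
TRANSITIONS = {
    "cup on table": [("reach", "hand at cup", 1.0), ("lean over and sip", "cup at mouth", 10.0)],
    "hand at cup": [("grasp", "cup in hand", 1.0)],
    "cup in hand": [("lift", "cup at mouth", 2.0)],
}
```

Calling `a_star(TRANSITIONS, "cup on table", "cup at mouth")` prefers the three-step reach, grasp, lift sequence over the single high-effort alternative. Weighting effort by success probability, as suggested above, would amount to changing the cost assigned to each transition.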

On this last point, transitions do not occur in isolation but with respect to a current state of the world, including obstacles to be avoided. To plan around these obstacles, taking advantage of the perceived state of the world, the spatial maps must be repeatedly queried. To connect back to my thesis on language understanding: This repeated querying and planning may provide the substrate of simulation that represents semantic understanding of language.

A motor plan incorporates the state of the world by moving around, towards, or through the objects perceived out in the world. Each of these actions is itself the maintenance of a spatial relationship from some state to another state. This is the meaning of the claim that motor planning is performed with respect to waypoints in perceptual space, as was discussed in my post on the principles of motor control.

Initiating Motion, Proactive and Reactive

The final step for the motor system is to look at why planning is initiated and where the goals originate. Here again there is a dichotomy. On the one hand, the premotor cortex is wired to spatial perception and can generate reactive responses to sensory stimuli, whereas on the other hand the supplementary motor area receives input from the cingulate cortex in the motivational system to initiate proactive responses to the environment based on the anticipation of reward. Thus there are two modes by which actions begin, proactive and reactive, that enter the motor system through different pathways, although the proactive system is dominant over the reactive and usually inhibits reactive responses.

The existence of a reactive pathway is perhaps surprising. We are all familiar with reflexes, in which our bodies move without us willing them to do so. Similar automatic perception-action loops are present at various levels of the motor hierarchy, including near the top. McBride et al. (2012) review phenomena in patients with damage to the supplementary motor area such as alien hand syndrome, in which patients will unconsciously pick up a cup or other object simply because it is there, or utilization behavior, in which patients will “use” an object placed in front of them despite not wanting to and trying to stop it. These disorders appear to be a failure of inhibition, potentially originating from the right inferior frontal gyrus (McBride et al., 2012) and mediated by the supplementary motor area.

I interpret these syndromes as revealing the relatively automatic function of the premotor cortex in response to perception. Whenever a commonly used object is observed, the premotor cortex plans a motor response and begins to act on it. This action will proceed unless canceled by the supplementary motor area on behalf of the motivational system. In this way, the premotor cortex can get ahead of the motivational system so that it is ready to act in response to perception.

The top left side of Figure 2 shows this perception-driven reactive initiation of action. This component of the motor architecture would be triggered by the incoming perceptual stream to anticipate future goals and cue up percept-driven planning. This plan would then be confirmed or rejected through communication with the proactive system based on reward cues.

Thus whereas the left side responds to anticipated action, the proactive initiator operates based on anticipated rewards calculated in the motivational system. The motivational system generates an event that triggers the proactive system, which then engages the reactive system as well as the self-referential planner to begin planning an action.
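The interplay between reactive initiation and proactive cancellation can be summarized in a few lines of Python. This is a sketch under strong assumptions: percepts are labels, each familiar object cues a single canned plan, and the motivational system is reduced to a reward estimate compared against a threshold. The names `initiate`, `affordances`, `reward_estimate`, and `inhibition_intact` are hypothetical.

```python
def initiate(percepts, affordances, reward_estimate,
             veto_threshold: float = 0.0, inhibition_intact: bool = True):
    """Return the percept-driven plans that are allowed to proceed.

    The reactive side cues a plan for every percept with a known affordance.
    The proactive side cancels plans whose anticipated reward falls below
    the threshold, unless inhibition has failed.
    """
    proceeding = []
    for percept in percepts:
        plan = affordances.get(percept)  # reactive: perception cues a plan
        if plan is None:
            continue
        if inhibition_intact and reward_estimate(plan) < veto_threshold:
            continue  # proactive system rejects the cued plan
        proceeding.append(plan)
    return proceeding
```

With `inhibition_intact=False`, every cued plan proceeds regardless of reward, which is a crude analogue of the utilization behavior described above: the cup is picked up simply because it is there.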

Conclusion

I promised to develop a motor architecture that would be perception focused, causally organized, spatially oriented, planned around waypoints, varying in complexity, event-timed, and spatially dichotomous. The motor architecture laid out in Figure 2 implements these principles. Several concepts still need to be clarified, such as the idea of a self-referential plan, the exact mechanisms of spatial plan construction, and the methods by which such a motor system can be learned through interaction with the world.

This post completes the series I began three months ago on the subcognitive architecture. The purpose of the series has been to understand the substrate on which cognition operates, so that I could make more informed claims about phenomena that appear at a higher level of consciousness, such as causation, semantics, metaphor, and reasoning.

Among the next posts, I would like to step back and take stock of the claims that I have made that are either uncertain, speculative, or novel. In future posts, I hope to delve deeper into these claims. Beyond these subjects, I think it would make sense to focus on how learning and adaptation could function within such a system, particularly in light of current developments in artificial intelligence. I also think that there is much more to say about cognition proper as an outgrowth of the allocentric navigation system. These topics will then bring us back to the topic of language in due time.

Thanks for reading, and if you have any suggestions, please leave them in the comments below!